This post makes a case for universities investing in people and processes for reviewing research in-house before publication. The idea has no doubt been proposed before and is probably already a feature of some academic institutions, but I want to set out here why I think it would benefit academic research.

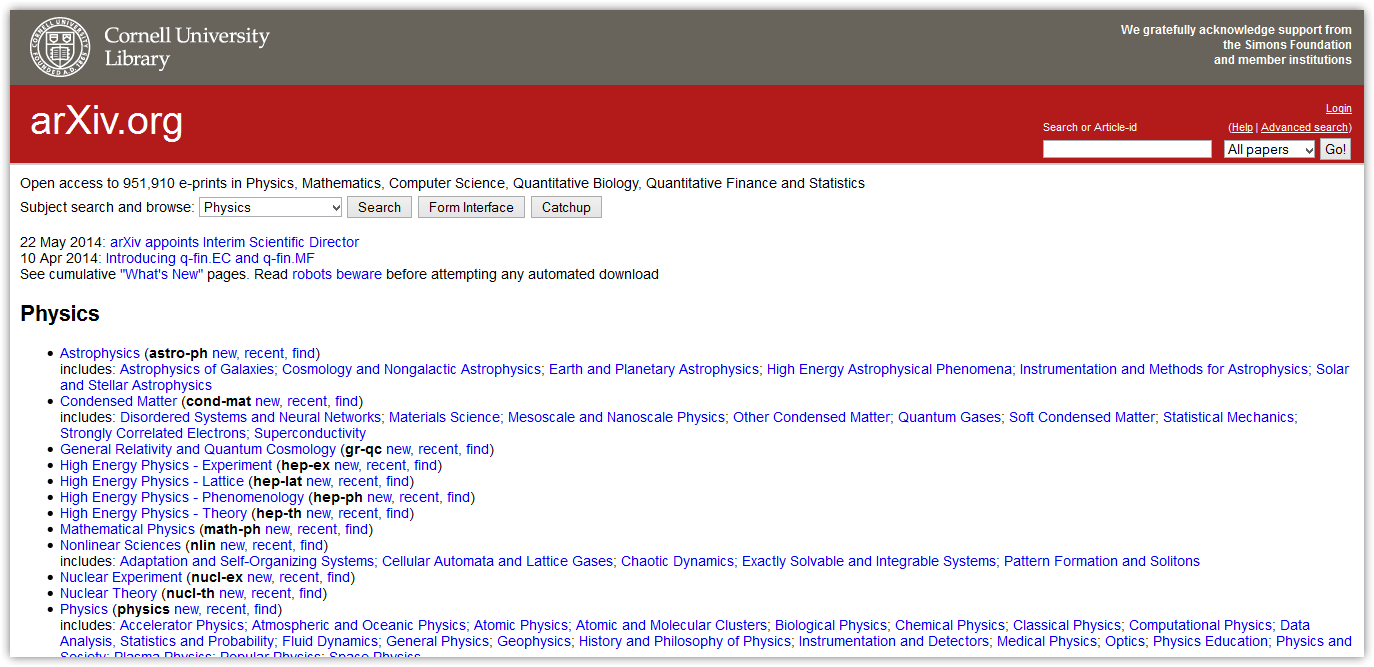

High-energy physics research is often held up as the archetypal open science discipline. Researchers upload their preprints to the arXiv when they are ready to share them, rather than waiting for traditional journal-based peer review, so the discipline is almost entirely open access. Cultures of preprinting have been adopted across many other disciplines too, and were hailed as hugely important in the response to the COVID-19 pandemic. Sharing unrefereed research is increasingly commonplace for researchers in many disciplines.

Yet as Kling and McKim wrote in their highly influential paper on disciplinary differences in publishing, the process by which high-energy physics research reaches the arXiv is not as simple as releasing unrefereed work for public consumption. Instead, it reflects an extensive and careful process of evaluation across multiple groups of collaborating institutions:

> High-cost (multimillion dollar) research projects usually involve large scientific teams who may also subject their research reports to strong internal reviews, before publishing. Thus, a research report of an experimental high-energy physics collaboration may have been read and reviewed by dozens of internal reviewers before it is made public.

(https://arxiv.org/abs/cs/9909008)

By the time an experimental high-energy physics article is made public, it has undergone an extensive, internally managed review process that can be understood as a kind of peer review. Care goes into bringing together the many pairs of eyes that have a stake in a paper's authorship. Authors have confidence in their preprint not just because there is safety in numbers among hundreds or thousands of co-authors, but because those co-authors have developed internal processes for ensuring confidence in their research.

Although I do not want to reify this or any other kind of peer review as more effective at arriving at scientific ‘truth’, the reputation physicists have acquired for working entirely in the open does not tell the full story about their review processes. That reputation elides the fact that a preprint is often a well-evaluated document rather than a first draft or unfinished paper, even if it may still change in response to subsequent feedback or revisions. What interests me is whether a similar internal process might be useful in other disciplines too.

I think it’s fair to say that, across all disciplines, many researchers share their work with colleagues before wider dissemination via a journal, preprint server, etc. This might happen through informal networks of peers, mentors, or simply other people in the same department or lab. But what if there were processes to facilitate and nurture these practices, and what if those processes were made clear to readers at the point of wider dissemination? If an organisation were to formalise and certify this process, devoting resources to organising it according to disciplinary conventions, would this both encourage earlier dissemination of research and give readers confidence that they are not the first people to read it?

I am essentially arguing for a dedicated role within a discipline-specific setting: someone who knows intimately the manner and rhythm of their field’s publishing culture, and who can help organise this process across their department (or wherever it fits best). Such a role would make visible the feedback and revision that took place before research was disseminated, while supporting others trying to do the same. (It is in no way intended to be punitive.)

I am not arguing that these internal review processes tell us much about the research itself (any more than any peer review process can), only that papers from a given lab, department, centre, etc. would have received feedback in broadly the same way. By making these processes visible and encouraging more people to adopt them, we begin to understand the varying levels of assessment that research receives as it is disseminated more widely. This would also challenge publishers' position as the final gatekeepers and dilute their influence over peer review as a black-and-white system of verification.

By building the capacity for such a system of feedback, and funding people to make it work, universities would begin to shift research culture and reclaim elements of knowledge production from the marketised publishing industry. This approach would encourage better practices before unrefereed research is shared, ultimately leading to greater confidence in such research across all disciplines.