UK Research and Innovation today published its updated policy on open access. For journals, the policy is simplified and normalised across the disciplines. Immediate open access under CC BY is mandated (with exceptions considered on a case-by-case basis), meaning no embargoes for green open access. UKRI will not fund hybrid publishing where the journal in question does not have a transitional agreement. All in all, the policy reflects the direction of travel towards immediate open access for research articles, something policymakers feel the now more mature market is able to accommodate.

The policy also mandates open access book publishing subject to a one-year embargo. Unlike in journal publishing, open access is not a dominant method of publishing long-form scholarship. The economics of book publishing are different: it relies on specialist editorial and production work that needs to be accounted for, alongside printing and distribution costs (particularly as print sales are likely to be one of the main ways of funding open access books). Many models have been developed to support OA monographs, but no single workable model has emerged.

In recognition of the need to explore new models, UKRI has earmarked a block grant of £3.5 million to support open access book publishing. Though it isn’t immediately clear what this money can be spent on, it is reasonable to assume that the dreaded book processing charge (BPC) is one possible approach. Often totalling upwards of £10,000, the BPC is a staple model used by commercial publishers for open access books. It is a single payment intended to cover editorial and production costs and to mitigate the loss of revenue implied by giving away a free digital copy. In practice, these same publishers are able to sell print copies through regular channels, and so BPCs (which are eye-wateringly expensive) remove risk for commercial organisations wanting to publish open access while allowing them to monetise books as they have always done. It isn’t a great model for publishing.

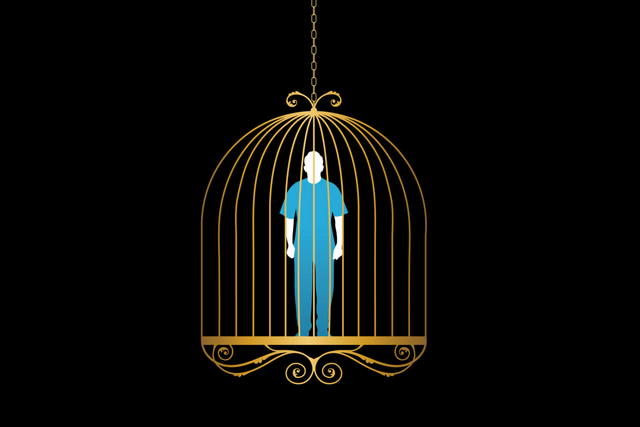

As more prestigious venues will charge more, the BPC will be just as pernicious as the article-processing charge has been for journal publishing. Authors are spending someone else’s money and so there is no reason for them to be price-sensitive, especially given the high reward that prestige offers. Without further intervention, it is likely that freeing up public money through a block grant will cement the BPC as the primary business model for open access books. This will create a two-tiered system whereby researchers with funding can publish open access books, while those without cannot.

It is important to bear in mind that open access book publishing was pioneered by presses that do not require author payment and instead rely on a range of models and subsidies to support their work. The Radical Open Access Collective is home to many of them, and Lucy Barnes’ Twitter thread below illustrates more. Small, often scholar-led presses have been pioneering OA books for years and their contribution needs to be recognised. But how do they access the funding available for open access monographs? Do they have to start charging BPCs, thus rehearsing all the problems with marketisation, or can the money instead be used to fund their operations directly, through consortial funding (as the COPIM project is developing) or direct payments to presses? Without such a route, we’ll see commercial publishers swoop in and use BPCs to snatch the funding that UKRI has made available.

The day UKRI releases its policy for #OpenAccess books is a great time to remind everyone that there are award-winning presses publishing OA books without charging BPCs — such as @OpenBookPublish, which has been doing so since 2008. https://t.co/CRYtc85nUI #OAbooks 1/5

— Lucy Barnes (@alittleroad) August 6, 2021

This has always been the main problem with open access policies: they do not take a view on the publishing market, instead merely promoting open over closed access. This not only glosses over the broader motivations for open access, which are about redirecting scholarly communication towards more ethical models and organisations, but also creates new problems by freeing up money that allows commercial publishers to consolidate their power. As with journals, we may well see the emergence of publishing models designed to remove the expert labour and editorial care involved in book publishing (which is already happening in much of the commercial book publishing world) and to automate book production to make it more commercially viable.

But academic book publishing is not and should not be commercially viable — it should be subsidised by universities and made freely available to all who want access. Open access offers the chance to reassess how the market shapes publishing and to return control of it to research communities themselves. It is vital, then, that the block grant for books announced by UKRI can be used to support the alternative ecosystem of open access book publishers and not (simply) those charging BPCs.